☁️ Deploying APIs with AKS (Azure Kubernetes Service)

In this advanced section of API Atlas, we move beyond Swagger, Redoc, and Postman to orchestrate full-stack deployments with AKS. This isn’t just infrastructure, it’s a paradigm shift; AKS is where declarative APIs become live, resilient services.

Why AKS? Because scaling APIs in production isn’t about containers alone, it’s about observability, autoscaling, secure ingress, availability zones, and a declarative infrastructure that devs and ops can both trust; AKS bundles these capabilities into one tightly integrated Azure-native ecosystem.

🧐 AKS Architecture: What Lives Where?

- Control Plane: API Server, Scheduler, Etcd, Azure RBAC

- Node Pool: Kubelet, Container Runtime, Pods/Deployments

- Networking: Ingress Controller, Load Balancer, DNS Routing

- Application: REST APIs, OpenAPI Specs, Autoscaling

Figure 1. This architectural breakdown outlines AKS across four planes: Control Plane, Node Pool, Networking, and Application. Azure manages the control plane, including the Kubernetes API server, scheduler, and etcd, so you can focus on pods and deployments.

Kubernetes is complex, but AKS abstracts away much of the toil. Think of it like an air traffic control system: you chart the flight path (YAML), define your aircraft (pods), and AKS coordinates the sky (cluster). This division of labor is what lets DevOps practices scale in a cloud-native setup.
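To make the "flight path" concrete, here is a minimal sketch of a Deployment manifest. Everything specific in it is an assumption for illustration: the `orders-api` name, the `myregistry.azurecr.io` image, port 8080, and the `/healthz` and `/ready` probe paths are hypothetical and would be replaced by your own values.

```yaml
# Hypothetical Deployment: name, image, port, and probe paths are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
  labels:
    app: orders-api
spec:
  replicas: 3                      # desired number of identical pods
  selector:
    matchLabels:
      app: orders-api              # must match the pod template labels below
  template:
    metadata:
      labels:
        app: orders-api
    spec:
      containers:
        - name: orders-api
          image: myregistry.azurecr.io/orders-api:1.0.0
          ports:
            - containerPort: 8080
          resources:
            requests:              # what the scheduler reserves for the container
              cpu: 100m
              memory: 128Mi
            limits:                # hard ceiling enforced at runtime
              cpu: 500m
              memory: 256Mi
          livenessProbe:           # a failing liveness probe restarts the container
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 10
            periodSeconds: 15
          readinessProbe:          # a failing readiness probe removes the pod from Service traffic
            httpGet:
              path: /ready
              port: 8080
            periodSeconds: 5
```

You describe the desired state (three replicas of one container) and the cluster works to keep reality matching it, which is the division of labor the analogy points at.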

📦 CI/CD Into AKS: The Full Flow

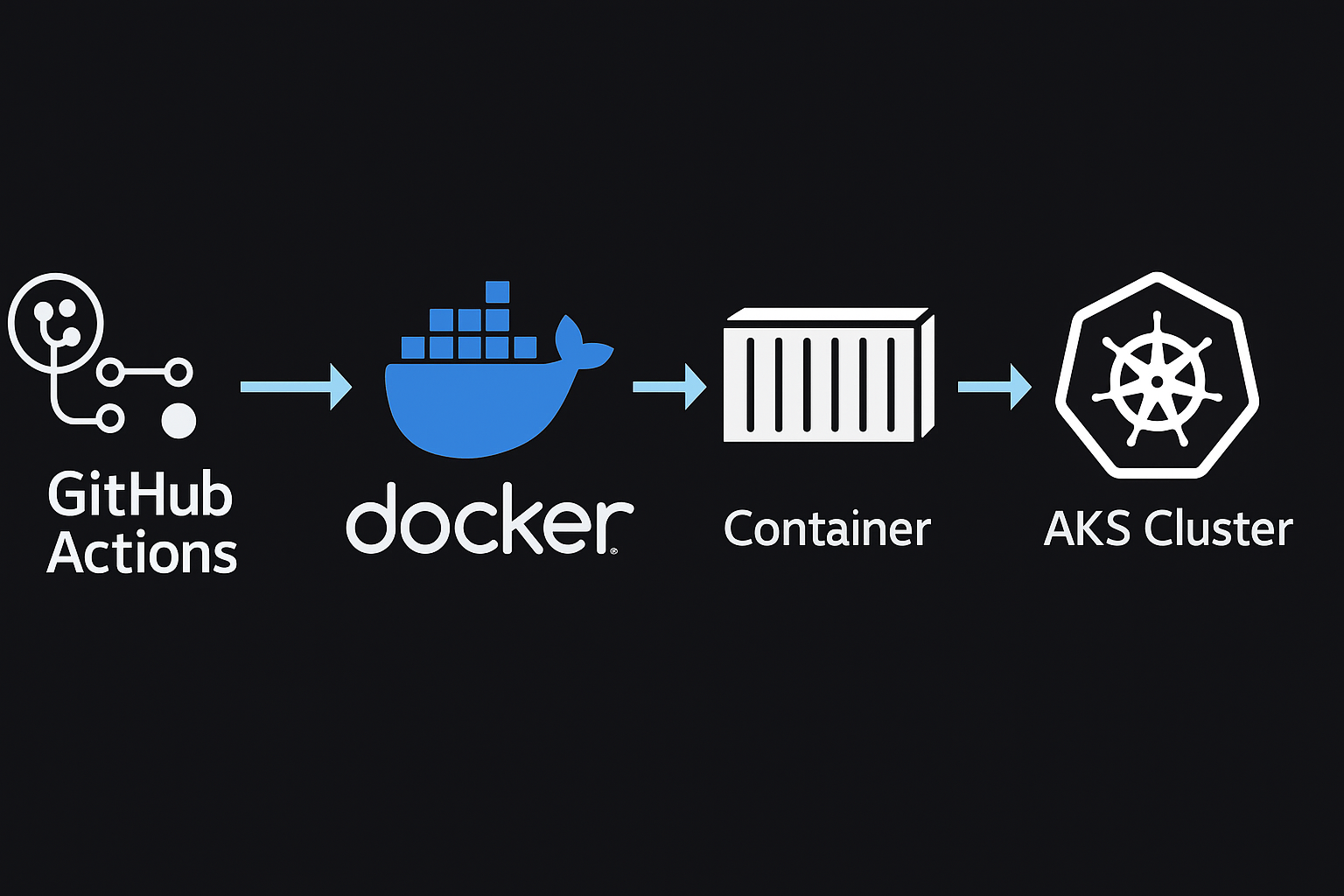

In the earlier stages of API Atlas, you validated OpenAPI specs in Swagger UI, tested endpoints via Postman, and created live reference docs with Redoc; the natural next step is turning those definitions into running services. This is the job of a CI/CD pipeline targeting AKS.

For example, a GitHub Actions pipeline might lint your YAML, validate schema consistency, run Postman tests in headless mode, and deploy to AKS using kubectl or Helm; the entire flow becomes testable, traceable, and reproducible.

💼 Who’s Using AKS for API Infrastructure?

Microsoft

Microsoft uses Azure Kubernetes Service (AKS) to power its internal engineering platforms and public APIs, including Azure DevOps and GitHub integration services. Their infrastructure strategy incorporates GitOps workflows using Azure Repos, Helm, and Bicep templates to manage API microservices at scale. By combining AKS with Azure Monitor, Microsoft enables detailed observability, cost tracking, and zero-downtime deployments for enterprise clients. This dogfooding of AKS across multiple teams allows Microsoft to validate new Kubernetes features while delivering highly available developer tools.

Adobe

Adobe uses AKS to operate containerized backend services that support Creative Cloud, Adobe Document Services, and API-based rendering engines. Their approach includes running AKS across multiple regions with service mesh capabilities like Istio to ensure global API availability and strong service discovery. Adobe's telemetry is deeply integrated with Azure Log Analytics and Defender for Containers, enabling advanced threat monitoring and continuous compliance. AKS lets them dynamically scale processing APIs for media, forms, and e-signature pipelines across cloud-native environments.

Cisco

Cisco integrates AKS into its hybrid cloud deployments to run edge-aware APIs across on-prem and public Azure regions. Their typical setup includes AKS clusters deployed with Terraform and linked into SecureX for real-time observability, incident correlation, and network policy enforcement. These Kubernetes clusters run Cisco’s microservices for infrastructure telemetry, device provisioning APIs, and operational dashboards. By using AKS, Cisco automates updates to services via CI/CD pipelines while adhering to strict compliance and availability standards for enterprise customers.

Starbucks

Starbucks relies on AKS to operate APIs behind its global mobile ordering, loyalty program, and in-store point-of-sale systems. Helm charts are used to configure and deploy these APIs consistently across geographies. AKS enables Starbucks to manage rolling updates for core services—like payment processing, customer data retrieval, and order queuing—while maintaining global uptime. With Azure DevOps pipelines, they validate new deployments via Helm linting, secrets management, and traffic mirroring, ensuring consistent delivery of services to millions of users daily.

These aren’t just brands, they’re AKS case studies. Microsoft runs core services with AKS for internal testing and external scaling; Adobe runs media and document processing APIs on multi-region clusters; Starbucks leverages Helm for consistent rollout of its mobile ordering APIs. If you’re preparing for DevOps interviews, this is your signal: AKS knowledge shows up in real production stacks and is worth being able to discuss.

Understanding how GitOps, RBAC, and service meshes like Istio integrate into AKS clusters lets you contribute from day one to enterprise-grade systems.

🔍 Why Not EKS, GKE, or Bare Metal?

Great question. AKS competes with EKS, GKE, and even bare-metal setups, but AKS wins when your stack already lives in Azure. With tight integration with Azure Active Directory (now Microsoft Entra ID), Azure Monitor, and the Azure CLI, AKS removes much of the integration friction.

You don’t need to self-manage the Kubernetes control plane, set up DNS zones manually, or configure the kubelet from scratch; it’s opinionated, fast, and cloud-native out of the box.

📘 From YAML to Running Services

Let’s zoom out and trace the entire journey:

- Start with an OpenAPI spec in Swagger UI

- Test and document the flow with Postman and Redoc

- Package endpoints with GitHub Actions

- Push to container registry (optional)

- Deploy YAML and Helm charts to AKS
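The steps above can be sketched as a single GitHub Actions workflow. This is an illustration under stated assumptions, not a drop-in pipeline: the `k8s/` and `tests/` paths, the `AZURE_CREDENTIALS` secret, and the `myregistry`, `my-resource-group`, `my-aks-cluster`, and `orders-api` names are all hypothetical, and the Postman collection is assumed to target an environment the runner can reach.

```yaml
# Hypothetical workflow: paths, secret names, and Azure resource names are placeholders.
name: deploy-to-aks

on:
  push:
    branches: [main]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Lint Kubernetes YAML
        run: |
          pip install yamllint
          yamllint k8s/

      - name: Run Postman collection headlessly with Newman
        run: |
          npm install -g newman
          newman run tests/api.postman_collection.json

      - name: Log in to Azure
        uses: azure/login@v2
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}

      - name: Build and push the image to Azure Container Registry
        run: az acr build --registry myregistry --image orders-api:${{ github.sha }} .

      - name: Point kubectl at the AKS cluster
        uses: azure/aks-set-context@v3
        with:
          resource-group: my-resource-group
          cluster-name: my-aks-cluster

      - name: Validate manifests against the cluster without applying them
        run: kubectl apply --dry-run=server -f k8s/

      - name: Deploy manifests and roll out the new image
        run: |
          kubectl apply -f k8s/
          kubectl set image deployment/orders-api orders-api=myregistry.azurecr.io/orders-api:${{ github.sha }}
          kubectl rollout status deployment/orders-api
          # With a Helm chart instead of raw manifests, the deploy step could be:
          #   helm upgrade --install orders-api ./chart
```

Tagging the image with the commit SHA keeps every deployment traceable to a specific commit, which is what makes a rollback straightforward.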

Your YAML becomes a Deployment, Service, or Ingress in AKS; and because it’s declarative, you can diff it, test it, and roll it back.

If an API is like a product, then AKS is your assembly line: scalable, modular, and self-healing. Kubernetes monitors pod health, restarting containers that fail their liveness probes and withholding traffic from pods that fail their readiness probes. Before we move on, test your understanding with the quick AKS deployment quiz below.
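As a sketch of the Service and Ingress kinds named above, the manifests below expose a set of pods inside the cluster and route external HTTP traffic to them. The `app: orders-api` label, the ports, the `api.example.com` host, and the `nginx` ingress class are assumptions; the ingress class in particular depends on which ingress controller is installed in your cluster.

```yaml
# Hypothetical Service and Ingress: label, ports, host, and ingress class are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: orders-api
spec:
  type: ClusterIP                  # reachable only inside the cluster
  selector:
    app: orders-api                # routes to pods carrying this label
  ports:
    - port: 80                     # port the Service listens on
      targetPort: 8080             # port the container listens on
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: orders-api
spec:
  ingressClassName: nginx          # assumes an NGINX ingress controller is installed
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /orders
            pathType: Prefix
            backend:
              service:
                name: orders-api
                port:
                  number: 80
```

Only pods that pass their readiness probe are added to the Service endpoints, so the Ingress sends traffic only to instances that have reported themselves ready.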

🚀 Quick Check: AKS Deployment Quiz

1. What does AKS stand for?

2. Which resource in Kubernetes defines CPU and memory limits?

3. What is the purpose of a readiness probe?

🎯 What’s Next: Kubernetes Config

Now that you’ve seen how APIs go from spec to deployment, we’ll go one layer deeper.

Next, you’ll configure Kubernetes manifests to fine-tune service behavior: setting replica counts, applying rolling updates, securing endpoints with RBAC, and attaching secrets from Azure Key Vault.

In other words, this is where your YAML gets opinionated and your clusters become battle-tested.